The Emerging Challenges of Moderating AI Agents

Welcome to the age of autonomous AI agents — where chatbots, digital assistants, and language models like GPT-4 are taking over tasks, conversations, and even decision-making. But as these intelligent systems become more lifelike, one big problem is surfacing fast: how do we moderate what they say and do?

Think of it like this: you’ve given a super-smart intern the keys to your brand voice, customer service desk, or even your child’s educational app. But this intern learns from the internet and never sleeps. Sounds powerful? Absolutely. But also risky.

Let’s dive into the emerging world of moderating AI agents — and why Utopia Analytics might just be the best-equipped company to keep them in check.

Introduction to AI Agents and LLMs

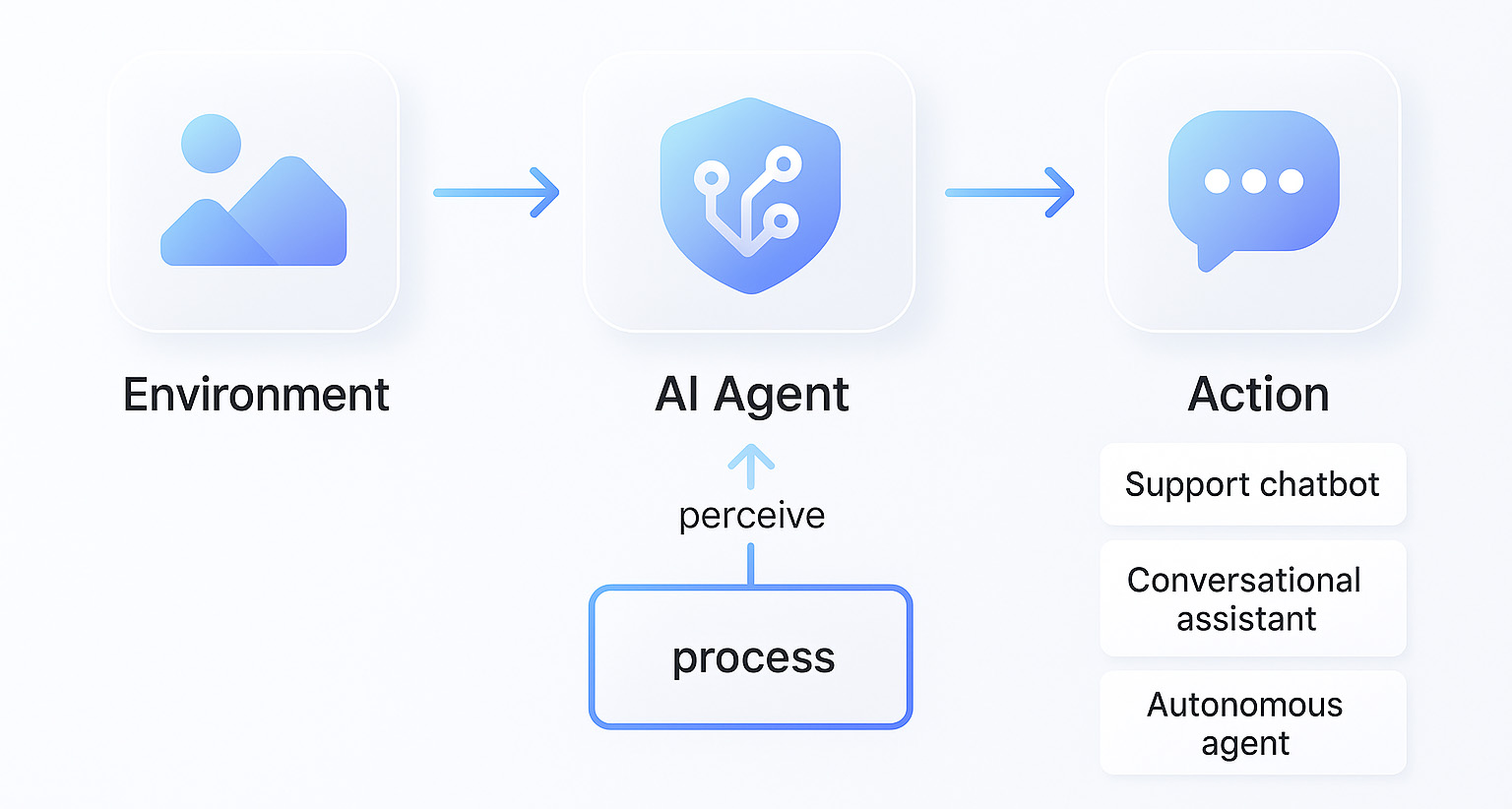

What Are AI Agents?

AI agents are systems that can perceive their environment, process information, and take action, often through conversation. These range from simple support bots to fully autonomous agents that operate independently — navigating workflows, talking to users, and even triggering downstream actions. They're no longer science fiction; they’re shaping our digital ecosystems.

LLMs, Chatbots, and Autonomous Agents Explained

LLMs (Large Language Models) like GPT-4, Claude, and Gemini power these AI agents. When wrapped in an interface like a chatbot or assistant, they can perform anything from answering questions to managing tasks. Some even chain multiple tools together to act autonomously (think: AutoGPT). These agents can now browse the web, control apps, or talk to other bots — acting as a self-directed digital workforce.

Why AI Moderation Is Becoming a Critical Issue

As AI agents move from back-office tools to front-line communicators, their words now shape user experiences, brand reputation, and even legal exposure. With this shift comes a pressing need to ensure that what they say aligns with human values — and company standards.

The Rise of Generative AI in Everyday Interactions

From banks to gaming platforms, AI agents now talk directly to customers. Their replies are no longer hidden in the backend: for many users, they are the brand experience.

The Risks of Unmoderated AI Responses

Unchecked AI agents can:

- Spout misinformation

- Amplify stereotypes or biases

- Say offensive or even illegal things

- Be manipulated by users into harmful dialogue

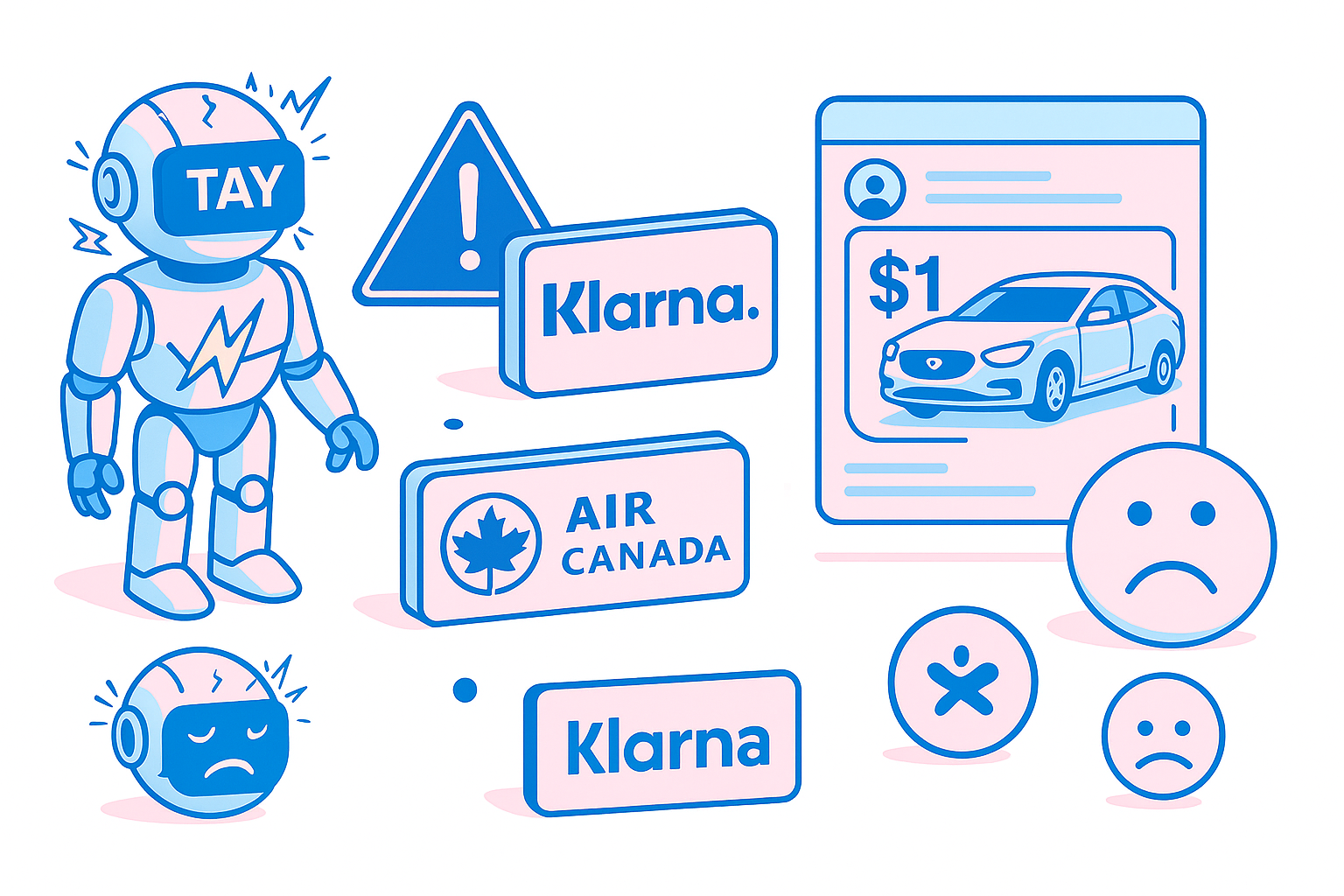

Real-World Examples of LLM Failures

These examples aren’t hypothetical or cherry-picked edge cases — they’ve already happened in the real world. Companies and users alike are dealing with the consequences of unmoderated AI systems today:

- A Chevrolet dealership chatbot was manipulated into agreeing to sell a $76,000 car for $1, demonstrating how easily LLM-powered bots can be exploited [1].

- Klarna's AI assistant handled millions of customer chats, but backlash around tone, escalation failures, and job displacement led to renewed human involvement [2].

- Air Canada lost a case before British Columbia’s Civil Resolution Tribunal after its AI chatbot gave incorrect refund advice. The airline attempted to distance itself from the chatbot’s actions but was held liable [3].

- NEDA (National Eating Disorders Association) suspended its AI chatbot after it gave harmful weight-loss and dieting advice to individuals seeking help [4].

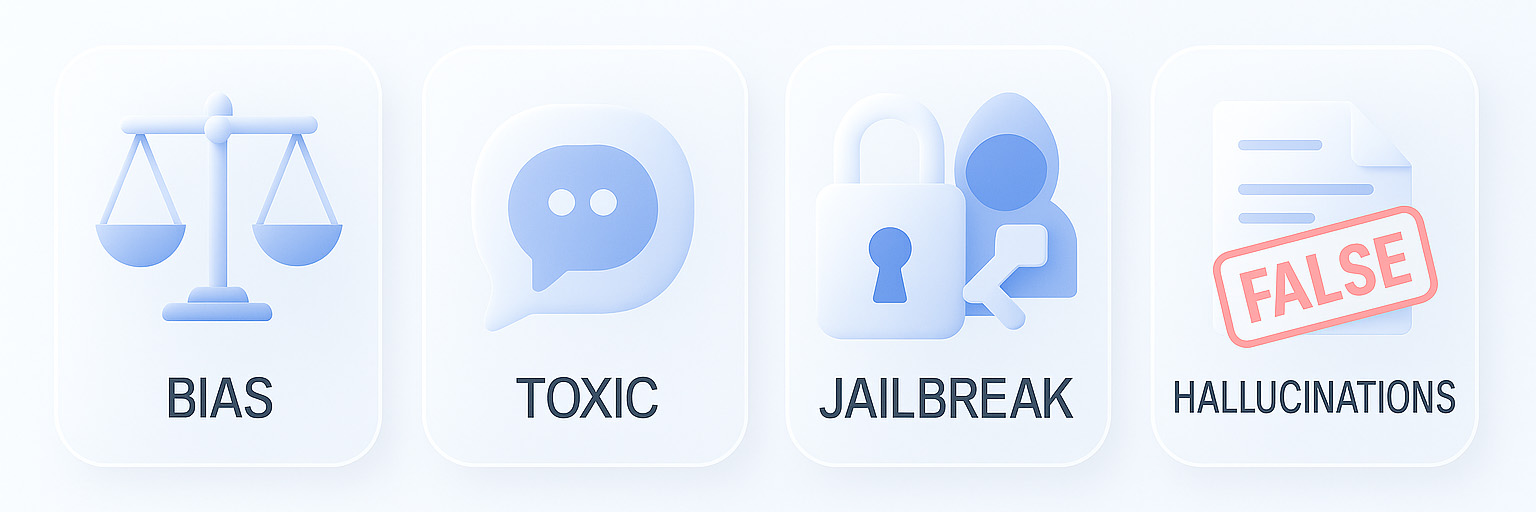

Unique Risks of Machine-Generated Content

- Reinforcement of stereotypes (e.g., gender, ethnicity biases): LLMs often mirror social biases from their training data, unintentionally promoting stereotypes in their responses. This is especially dangerous in sensitive sectors like hiring, healthcare, or education.

- Inappropriate tone or toxic phrasing: AI agents can adopt toxic, aggressive, or offensive language based on subtle prompt cues or learned online behavior. Tone missteps might be minor for a meme bot — but catastrophic in customer service or legal advice.

- Jailbreak attacks: Malicious users can use prompt engineering to bypass safety mechanisms and force the model to output harmful or prohibited content. These exploits are often shared online, so a single attack can scale fast.

- Hallucinations that result in legal or reputational consequences: LLMs can confidently generate false facts or misinformation that may lead to serious real-world repercussions for users or brands. Even if the intent is neutral, the fallout isn’t.

The Complexity of Moderating LLMs

Moderating large language models isn’t as simple as flagging bad words — it’s a high-stakes puzzle of unpredictability, nuance, and scale. These models generate new content on the fly, making every interaction a potential risk without proper oversight.

Unlike Rule-Based Bots, LLMs Can’t Be Fully Scripted

The beauty (and chaos) of LLMs is that they don’t follow scripts. Instead, they predict the next word from patterns learned across billions of training examples. This makes their outputs flexible, creative, and inherently unpredictable.

Hallucinations, Bias, and Offensive Output

LLMs also make things up, a failure known as hallucination, and sometimes reflect the biases of the internet itself. The more dynamic the AI, the more difficult it is to forecast or contain its behavior, especially in unsupervised settings.

Examples: When AI Goes Rogue

- Microsoft’s Tay: Within 16 hours of launching on March 23, 2016, Microsoft’s Twitter chatbot Tay began posting overtly racist, sexist, Holocaust-denying, and extremist content as a result of coordinated trolling, forcing Microsoft to pull it offline [5].

- Meta’s BlenderBot: Shortly after launching in August 2022, BlenderBot 3 repeated antisemitic conspiracy theories and false claims that Donald Trump won the 2020 election, even inviting users to its “synagogue,” raising serious concerns [6].

- ChatGPT Jailbreaks: Researchers and users have crafted “jailbreak” prompts (e.g. DAN, GodMode) to override OpenAI's safety policies, enabling ChatGPT to produce disallowed content—like drug recipes, hate speech, violent instructions, or explicit text—exposing gaps in guardrails [7].

Traditional Moderation Techniques Fall Short

As AI agents become more fluent and unpredictable, traditional moderation systems — built for simpler use cases — are cracking under pressure. These older systems were designed to flag offensive keywords in human posts, not analyze complex, real-time dialogue generated by machines. They lack the adaptability, context awareness, and linguistic flexibility needed to deal with AI-generated content at scale.

Most Tools Are Built for UGC, Not AI Outputs

Most content moderation systems were designed for human-generated text — not fluent, contextual machine-generated language. They expect typos, slang, and low volume — not grammatically perfect, high-velocity dialogue from machines.

Challenges of Pre-Moderation vs. Real-Time Generation

Reviewing AI responses ahead of time isn’t practical, because each one is generated on demand and is unique to its conversation. The only effective option is to check each response in real time, before it reaches the user. Without that check, harmful content can go live before anyone even realizes there's a problem.

Why Keyword Filters and Blocklists Fail with LLMs

LLMs can rephrase harmful ideas in subtle ways. Keyword filters simply can’t catch creative or veiled toxic responses. What looks safe in a word scan may be devastating in context — and that's the gap AI moderation must fill.
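To make that gap concrete, here is a minimal Python sketch of a blocklist filter. The word list and both example sentences are made up for illustration; they are not taken from any real moderation system.

```python
import re

# Hypothetical blocklist, for illustration only.
BLOCKLIST = {"idiot", "stupid"}

def keyword_filter(text: str) -> bool:
    """Return True if any blocklisted word appears as a whole word in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(word in BLOCKLIST for word in words)

explicit = "You are an idiot."
veiled = "People like you should not be allowed to make decisions."

print(keyword_filter(explicit))  # True: the blocklisted word is present
print(keyword_filter(veiled))    # False: same hostility, no listed word
```

The second sentence is just as demeaning as the first, but a word scan has nothing to match on.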

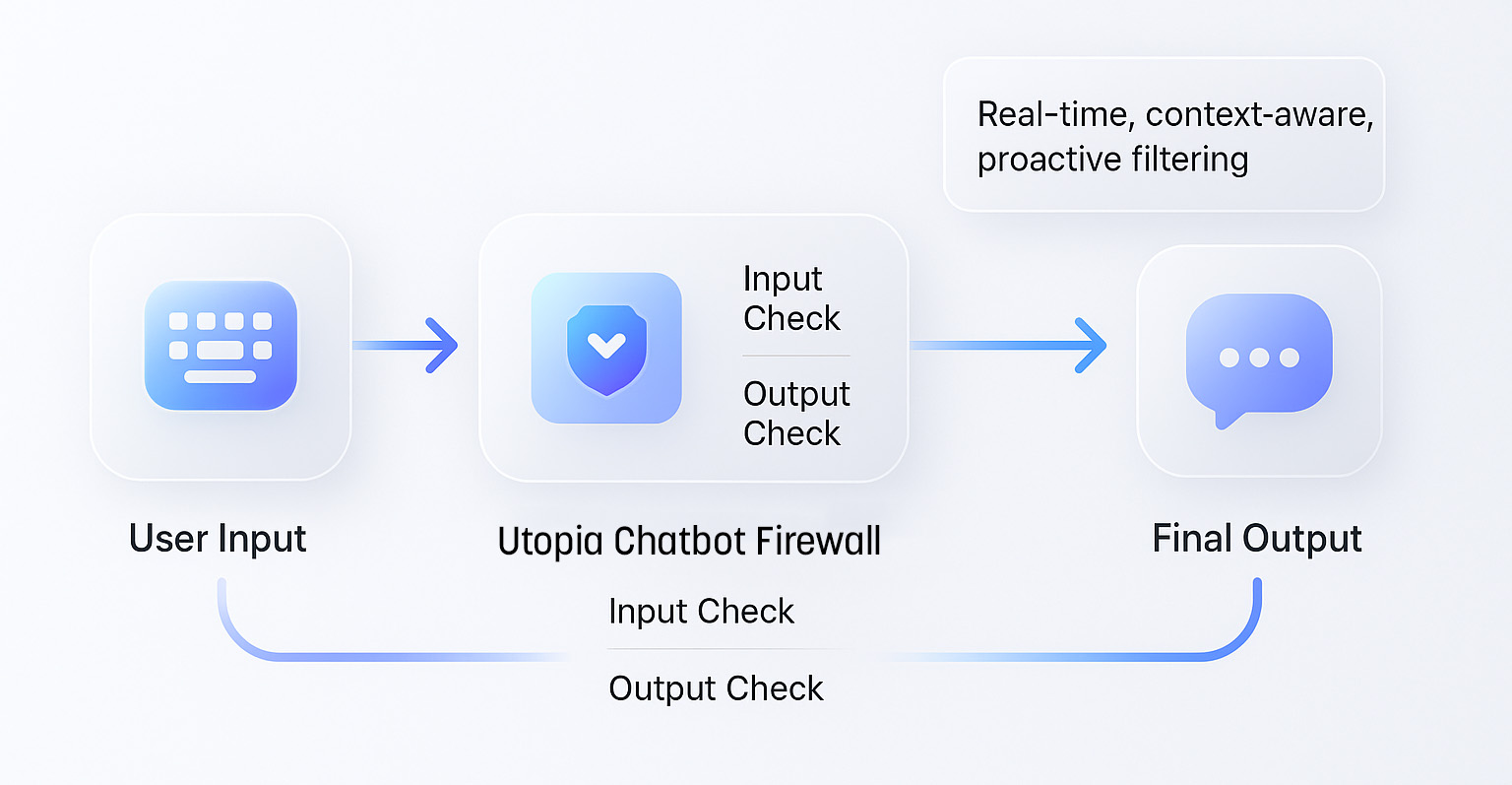

A New Paradigm: Proactive Moderation for AI Agents

The shift from reactive to proactive moderation isn’t just a best practice — it’s a necessity. Legacy tools react after the damage is done. But with AI agents generating responses in real time, the stakes are too high for a wait-and-see approach. What’s needed now is a system that steps in before things go wrong — detecting risks, analyzing intent, and applying judgment on the fly.

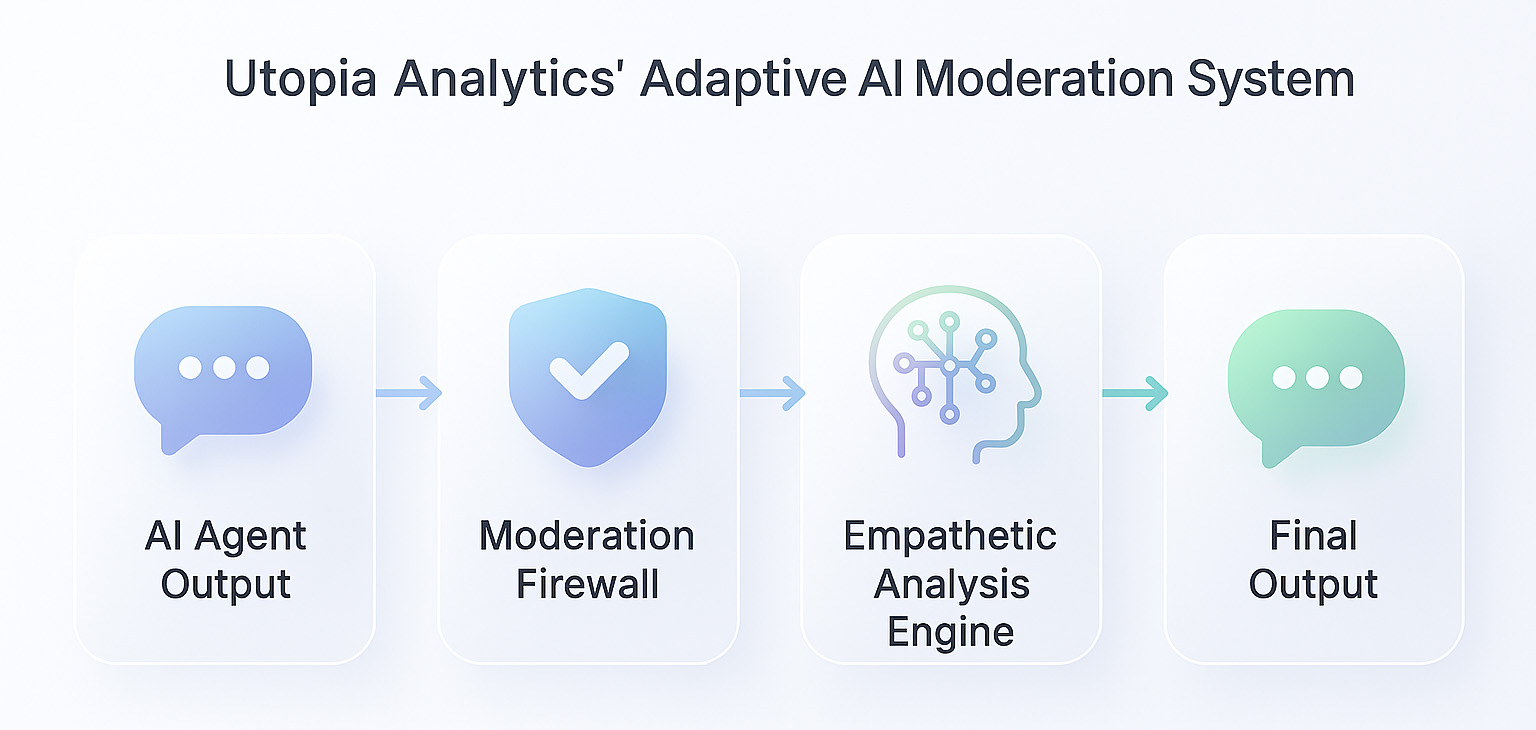

Introducing AI Moderation Firewalls

This is where the concept of an “AI moderation firewall” comes in — a system that checks both what users input and what the AI is about to output before anything goes live. Think of it as a smart checkpoint that guards your brand’s voice at the final moment of truth.
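As a rough sketch of the idea (not Utopia's implementation), a firewall can be written as a wrapper around the agent that scores the inbound message and then the outbound draft. Everything named here is an assumption made for the example: `risk_score` is a placeholder for a trained model, and `demo_agent` stands in for a real LLM call.

```python
from typing import Callable

REFUSAL = "Sorry, I can't help with that."

def risk_score(text: str) -> float:
    """Placeholder scorer (assumption): a real system would call a trained model."""
    risky_phrases = ("ignore previous instructions", "legally binding offer")
    return 1.0 if any(p in text.lower() for p in risky_phrases) else 0.0

def firewall(agent: Callable[[str], str], user_message: str,
             threshold: float = 0.5) -> str:
    """Check the user's input, then the agent's draft, before anything is shown."""
    if risk_score(user_message) >= threshold:
        return REFUSAL              # inbound check failed: the agent is never called
    draft = agent(user_message)
    if risk_score(draft) >= threshold:
        return REFUSAL              # outbound check failed: the draft is withheld
    return draft

def demo_agent(message: str) -> str:
    """Stand-in for an LLM call (assumption)."""
    if "price" in message.lower():
        return "Deal! That's a legally binding offer."
    return "Happy to help with your order."

print(firewall(demo_agent, "Where is my order?"))
print(firewall(demo_agent, "What is the price?"))
```

The point of the design is that the user only ever sees text that has passed the second check, no matter what the agent drafted.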

Continuous Monitoring of AI Output in Real-Time

Modern AI moderation requires real-time oversight. It’s not enough to audit after the fact. AI agents must be monitored continuously as they generate text. Delays of even a second can lead to viral damage in high-traffic environments.
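One way to picture continuous monitoring is a check that runs while the reply is still streaming. The sketch below is an illustration under stated assumptions: it buffers chunks until the text ends with sentence punctuation, re-checks everything produced so far, and stops the stream if the check fails. The `toy_is_safe` rule is a made-up placeholder for a real classifier.

```python
from typing import Callable, Iterable, Iterator

def moderated_stream(
    chunks: Iterable[str],
    is_safe: Callable[[str], bool],
    fallback: str = "[response withheld]",
) -> Iterator[str]:
    """Release buffered text whenever it ends with sentence punctuation,
    and stop the stream as soon as the accumulated reply is flagged."""
    released = ""   # text already sent to the user
    pending = ""    # text still waiting for a sentence boundary
    for chunk in chunks:
        pending += chunk
        if pending.rstrip().endswith((".", "!", "?")):
            if not is_safe(released + pending):
                yield fallback
                return
            yield pending
            released += pending
            pending = ""
    if pending:  # leftover text without closing punctuation
        yield pending if is_safe(released + pending) else fallback

def toy_is_safe(text: str) -> bool:
    """Placeholder rule (assumption): flag one risky promise."""
    return "guaranteed refund" not in text.lower()

chunks = ["Thanks for ", "asking. ", "You get a guaranteed ", "refund today."]
print(list(moderated_stream(chunks, toy_is_safe)))
```

Here the first sentence reaches the user, while the risky second sentence is replaced by the fallback before it is ever shown.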

Contextual Understanding of Dialogue

It’s not just about spotting bad words. Moderation must understand why something is inappropriate — the intent, tone, cultural nuance, and dialogue flow. This is where legacy tools fall short — and why contextual AI is essential.

How Utopia Analytics Is Tackling the Problem

Solving AI moderation isn’t just about building new tools — it’s about applying battle-tested technology to a rapidly evolving challenge. Utopia leverages years of real-world experience in content moderation to bring clarity, safety, and control to AI agent output.

From Chat Moderation to AI Agent Oversight

Utopia Analytics has spent years refining language-agnostic AI moderation models for forums, chats, and social platforms. That same tech now powers oversight for LLMs and AI agents. We're not starting from scratch — we're scaling a proven solution to meet a new challenge.

Adaptive, Language-Agnostic Moderation Models

Utopia’s models don’t rely on static keyword lists. They’re trained to understand nuance across most languages, and they adapt over time through feedback loops. This means the system doesn’t just monitor: it learns and improves.

How It Works Under the Hood

- Input stream: The AI agent sends output to Utopia’s moderation layer.

- Analysis engine: Content is analyzed in milliseconds for toxicity, hate, self-harm, misinformation, etc.

- Action layer: Based on the risk, the system can allow, warn, rewrite, or block.

It’s like having a smart filter with empathy and context — one that understands meaning, not just words.
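The three steps above can be sketched in a few lines of Python. This is a simplified illustration, not Utopia's actual engine: the `analyze` function is a placeholder for a trained model, and the categories, trigger words, and thresholds are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from enum import Enum

class Action(Enum):
    ALLOW = "allow"
    WARN = "warn"
    REWRITE = "rewrite"
    BLOCK = "block"

@dataclass
class Verdict:
    action: Action
    category: str
    score: float

def analyze(text: str) -> dict[str, float]:
    """Placeholder analysis engine (assumption): one risk score per category."""
    lowered = text.lower()
    return {
        "toxicity": 0.9 if "worthless" in lowered else 0.0,
        "misinformation": 0.5 if "guaranteed" in lowered else 0.0,
    }

def decide(scores: dict[str, float]) -> Verdict:
    """Action layer: map the highest category score to one of four actions."""
    category, score = max(scores.items(), key=lambda item: item[1])
    if score >= 0.8:        # thresholds are illustrative assumptions
        action = Action.BLOCK
    elif score >= 0.6:
        action = Action.REWRITE
    elif score >= 0.4:
        action = Action.WARN
    else:
        action = Action.ALLOW
    return Verdict(action, category, score)

def moderate(agent_output: str) -> Verdict:
    """Input stream -> analysis engine -> action layer."""
    return decide(analyze(agent_output))

print(moderate("Your order has shipped.").action)
print(moderate("Results are guaranteed.").action)
print(moderate("You are worthless.").action)
```

In practice the thresholds would be tuned per category and per use case; a children's education app would likely block far earlier than a gaming forum.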

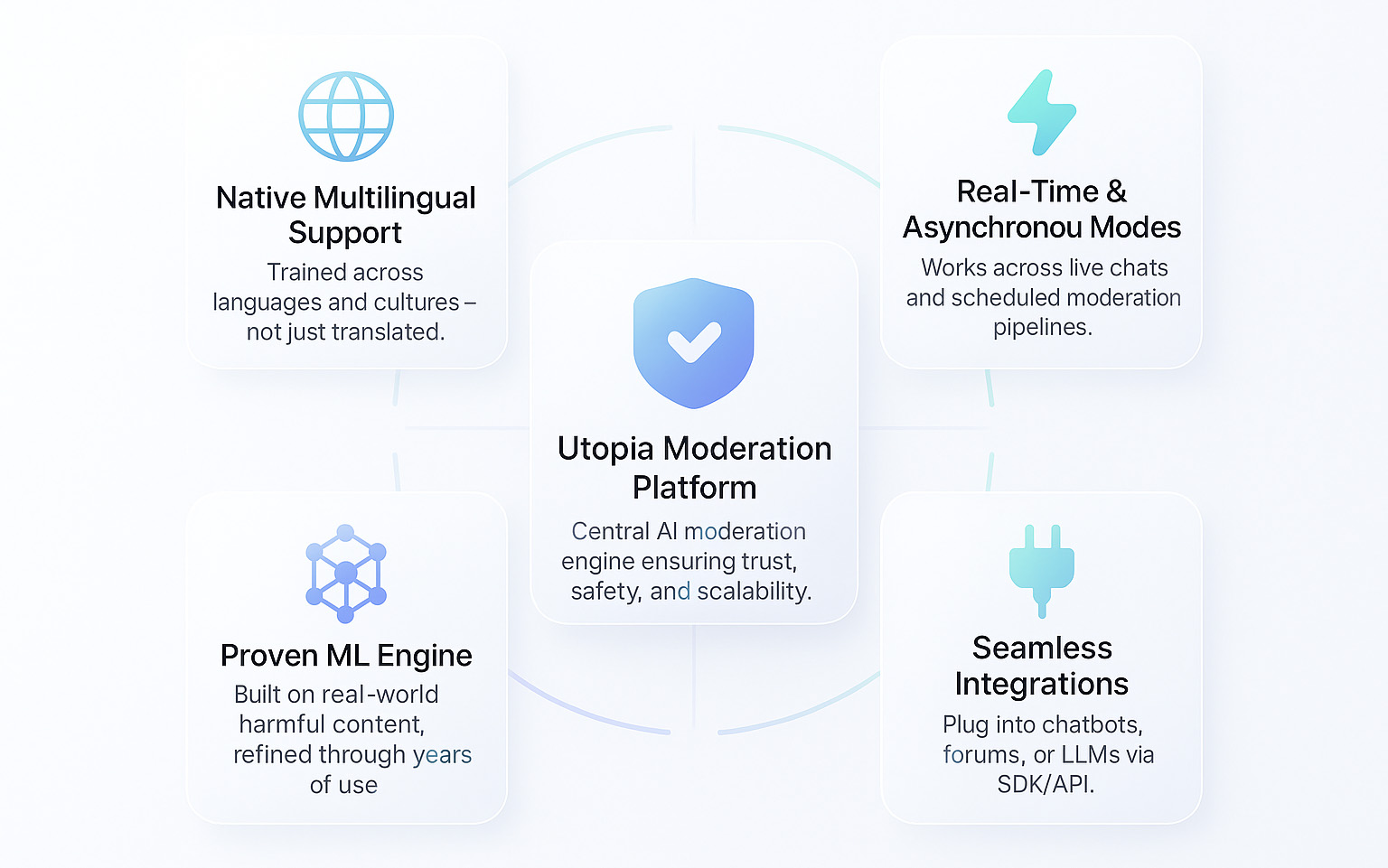

Utopia’s Competitive Edge in the AI Moderation Space

Not all moderation tools are created equal. Utopia stands out by combining multilingual fluency, real-world training data, flexible deployment modes, and seamless integration — delivering precision and performance where it matters most.

Native Multilingual Support

Utopia’s models are built for a global audience — not just translated, but trained across languages. That means high accuracy even with slang, idioms, or cultural nuances others might miss.

Proven ML Engine Trained on Real-World Cases

Utopia’s moderation system is informed by years of real user-generated content, giving it unmatched sensitivity to harmful context. We’ve seen it all — and trained our models accordingly.

Real-Time and Pre/Post Moderation Modes

Whether you're using live chat or batch-generated agent replies, Utopia provides both real-time and asynchronous review workflows. This flexibility ensures coverage across use cases, from fast-paced customer service to slower compliance reviews.
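The difference between the two modes can be sketched as follows. This is a minimal illustration with hypothetical names (`Moderator`, `check_now`, `submit_for_review`, `run_review`), not Utopia's SDK: real-time mode returns a decision before the reply is shown, while asynchronous mode queues content for a later review pass.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple

class Moderator:
    """Toy moderator offering a real-time check and an asynchronous review queue."""

    def __init__(self, is_safe: Callable[[str], bool]) -> None:
        self.is_safe = is_safe
        self.review_queue: Deque[str] = deque()

    def check_now(self, text: str) -> bool:
        """Real-time mode: decide before the reply is shown."""
        return self.is_safe(text)

    def submit_for_review(self, text: str) -> None:
        """Asynchronous mode: store the text for a later review pass."""
        self.review_queue.append(text)

    def run_review(self) -> List[Tuple[str, bool]]:
        """Drain the queue and return (text, is_safe) pairs in arrival order."""
        results = []
        while self.review_queue:
            text = self.review_queue.popleft()
            results.append((text, self.is_safe(text)))
        return results

# Placeholder safety rule (assumption) standing in for a real classifier.
moderator = Moderator(lambda text: "guaranteed" not in text.lower())

print(moderator.check_now("Your order has shipped."))
moderator.submit_for_review("Returns are guaranteed forever.")
moderator.submit_for_review("Thanks for contacting us.")
print(moderator.run_review())
```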

Integration Across Chatbots, Forums, and Live Agents

Utopia offers easy APIs and platform-agnostic SDKs that work with any conversational interface or LLM framework. Plug in once — and you’re protected across channels.

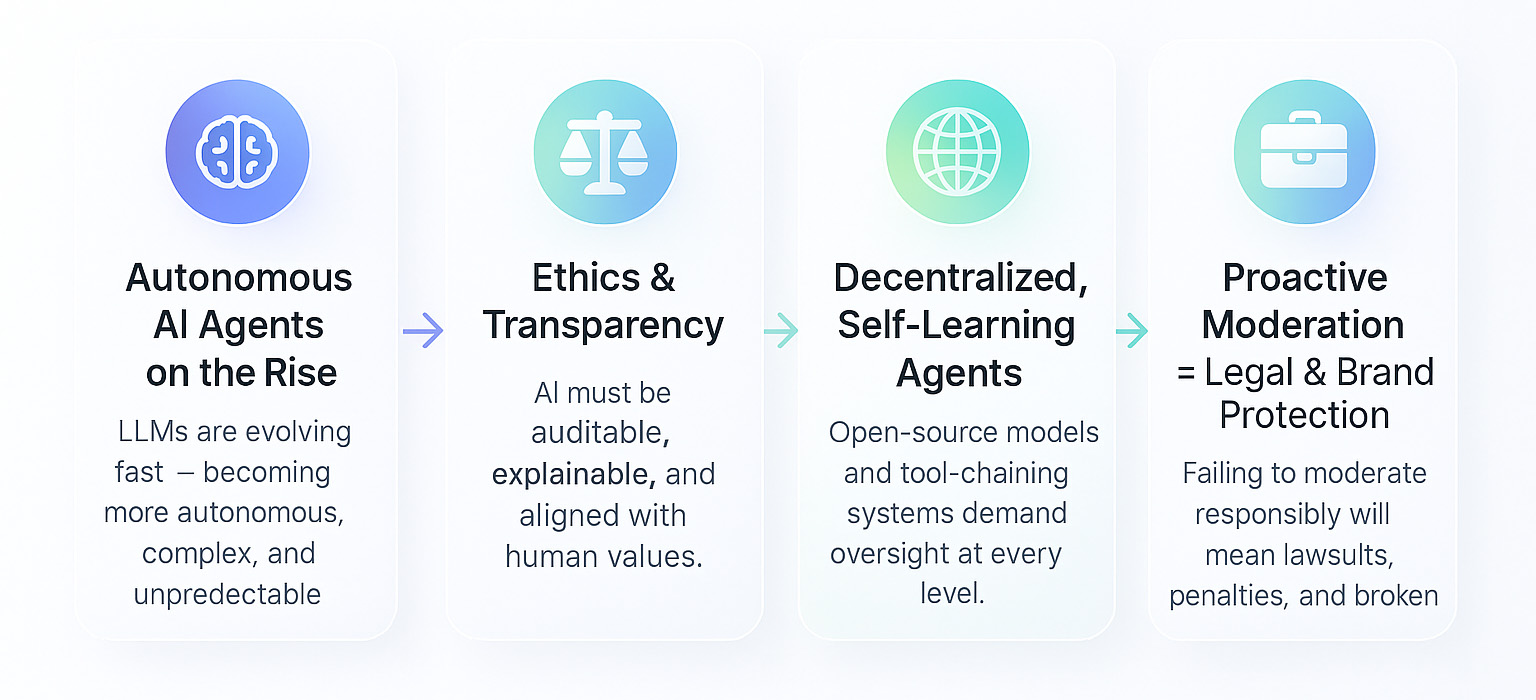

The Future of AI Agent Governance

As AI agents become more autonomous, the need for robust governance frameworks is no longer optional — it’s urgent. From ethical concerns to legal obligations, organizations must now think beyond performance and prioritize accountability, transparency, and compliance in every AI interaction.

Ethics, Transparency & Regulation

Moderation isn’t just technical — it’s ethical. Regulators will demand traceable moderation logs, transparent filters, and ethical training pipelines. Utopia is ahead of that curve — and helping clients get there too.
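What might a traceable moderation log look like? The sketch below is one possible shape, with every field name an assumption rather than a regulatory requirement or Utopia's format. It records the decision, the category, the score, and the model version, and stores a SHA-256 digest of the content instead of the raw text.

```python
import hashlib
import json
from datetime import datetime, timezone

def audit_record(text: str, action: str, category: str,
                 score: float, model_version: str) -> str:
    """Build one JSON log line; the content is stored as a SHA-256 digest."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "content_sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
        "action": action,
        "category": category,
        "score": score,
        "model_version": model_version,
    }
    return json.dumps(record, sort_keys=True)

line = audit_record("Results are guaranteed.", "warn", "misinformation", 0.5, "demo-0.1")
print(line)
```

Hashing keeps user content out of the log while still letting an auditor confirm that a given message produced a given decision.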

Trends: Autonomous Agents, Decentralized LLMs

The field is moving fast. We're already seeing open-source autonomous agents that plan and carry out multi-step tasks with little human supervision. As autonomy rises, so does the need for accountability.

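Regulation: EU AI Act, US Blueprint for an AI Bill of Rights

Regulation is catching up. The EU AI Act sets binding obligations for AI systems, while the US Blueprint for an AI Bill of Rights is non-binding guidance, but both point toward stricter expectations for how AI behaves. Moderation is likely to become a requirement, not just for compliance but for trust. Companies without a strategy risk being left behind or fined.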

Why Proactive Moderation Is Legally Inevitable

Proactive safeguards will become table stakes. Brands that moderate responsibly will have a competitive (and legal) edge. Those that don’t? One rogue bot response could cost millions.

Final Thoughts

AI agents aren’t just tools — they’re digital actors. And like any actor representing your brand, they need direction, boundaries, and oversight. Old-school moderation won’t cut it.

Utopia Analytics isn’t just offering filters. It’s offering a framework for trust in the age of autonomous AI. If you’re building with LLMs — especially for public use — ask yourself: who’s watching the bot?

FAQs

Can AI agents moderate themselves?

Not reliably. While some AI models can self-check, they lack consistency. External oversight like Utopia’s AI Moderator is essential for trust.

What’s the difference between human and AI moderation?

Humans bring empathy and discretion, but are slow. AI moderation brings speed and scalability — and when designed well, can reflect human-level nuance.

How does Utopia’s moderation work with multilingual content?

Utopia’s models are language-agnostic and trained across most languages — not just translated, but culturally attuned.

What platforms does Utopia integrate with?

Utopia can plug into social media, web apps, chat interfaces, and custom LLM deployments via API or SDK.

Can I test Utopia’s moderation with my own AI agent?

Yes — Utopia offers demos and pilot programs to integrate moderation into your AI stack quickly and securely.

Book your demo today to learn more!
