The Complete Guide to User-Generated Content (UGC) Moderation in 2025

User-generated content (UGC) is one of the most powerful tools on the internet today. Whether it’s product reviews, social media comments, or videos shared on a platform, UGC helps build trust, grow communities, and increase engagement. But with great engagement comes great responsibility.

Without proper moderation, UGC can expose your platform and users to harmful content, such as hate speech, fraud, harassment, or spam. This is why content moderation isn’t just a “nice-to-have” anymore. It’s a must.

In this guide, we’ll break down everything you need to know about moderating user-generated content in 2025: what it means, why it matters, the risks involved, and the best solutions to keep your platform safe.

What Is User-Generated Content Moderation?

User-generated content moderation is the process of reviewing, filtering, and managing content submitted by users. This can include text, images, audio, or video posted on social media, forums, marketplaces, dating apps, e-commerce review sections, and more.

The goal of UGC moderation is to ensure the content follows your community guidelines and legal requirements, so users can engage in a safe, respectful environment.

Moderation can be handled by human moderators, AI-powered tools, or a combination of both. The more content your platform handles, the more you’ll benefit from an automated or AI-assisted solution.

Why Is UGC Moderation So Important?

Protects Your Brand and Users

When users encounter toxic content, like harassment or scams, they lose trust in your platform. And when advertisers see your name tied to inappropriate content, they walk away. Effective moderation stops harmful content before it spreads and protects your brand image.

Fulfills Legal Obligations

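Regulations such as the EU’s Digital Services Act (DSA), which governs how platforms handle illegal content, and the U.S. Children’s Online Privacy Protection Act (COPPA), which restricts collecting personal data from children under 13, place legal duties on platforms that host user content. Failing to comply can result in major fines and lawsuits.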

Builds Healthier Communities

Moderated platforms foster safer, more welcoming spaces. When people feel safe, they’re more likely to return, participate, and recommend your platform to others.

Increases Revenue Opportunities

Safer environments are better for business. Advertisers are more likely to work with platforms that manage content responsibly. And users are more likely to stay engaged when they trust the space.

Best Practices for UGC Moderation in 2025

User-generated content can be your platform’s biggest asset, or its biggest risk. The way you moderate that content makes all the difference. Here are the best practices smart platforms are following in 2025 to keep things safe, respectful, and thriving.

1. Make the Rules Clear and Actually Useful

Don’t bury your content guidelines in legal jargon or tiny footer links. Write them in plain language, keep them visible, and show examples. Your users shouldn’t have to guess what’s okay to post, and your moderators will thank you for the clarity. A transparent set of rules helps everyone stay on the same page and builds trust with your community.

2. Let AI Do the Heavy Lifting, Humans Handle the Nuance

AI is great at handling huge volumes of content, flagging spam, detecting profanity, and scanning images in real time. But it’s not perfect, especially when it comes to sarcasm, satire, or cultural context. That’s where humans step in. The best moderation systems use both: AI for speed and scale, and trained human reviewers for the gray areas.

3. Act Fast: Real-Time Moderation Matters

In the world of content, speed isn’t a bonus; it’s a necessity. Harmful posts left unchecked, even for a few minutes, can go viral and cause real damage. That’s why real-time moderation is the gold standard. The faster you act, the safer your users feel, and the more credibility your platform earns.

4. Speak Everyone’s Language. Literally

If your platform is global, your moderation needs to be too. That means more than just translating rules; it means understanding slang, tone, and context in every language your users speak. Language-agnostic tools help moderation stay fair and accurate, no matter where your users are or how they write.

5. Invest in Your Moderators. They’re the Frontline

Your human moderators handle the toughest cases: emotionally difficult content and hard judgment calls. Provide them with proper training, mental health support, and the right tools. A well-supported team makes better decisions and stays strong in a role that’s crucial to your platform’s success.

6. Let Your Users Help Out

Your community isn’t just there to consume content; they can help protect it too. Give users simple, intuitive tools to report inappropriate posts. When users feel like their voice matters, they’re more likely to stay engaged and help shape a better space for everyone.

7. Keep Learning and Adjusting

Content trends change. Slang evolves. And bad actors get more creative. That’s why moderation needs to be a living process. Review your flagged content, analyze what slipped through, and adjust your rules or retrain your AI based on what you learn. Platforms that continuously adapt stay one step ahead of trouble.
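One way to make that review concrete is to measure how often your automated filter agreed with your human reviewers. The sketch below is illustrative only: the `ReviewedItem` record and its field names are hypothetical, and it assumes you have a sample of content where both the AI decision and a human verdict are on record.

```python
from dataclasses import dataclass


@dataclass
class ReviewedItem:
    """One piece of content with both decisions recorded (hypothetical schema)."""
    ai_flagged: bool       # did the automated filter flag it?
    human_violation: bool  # did a human reviewer judge it a violation?


def precision_recall(items: list[ReviewedItem]) -> tuple[float, float]:
    """Return (precision, recall) of the AI flags against human verdicts."""
    true_pos = sum(1 for i in items if i.ai_flagged and i.human_violation)
    false_pos = sum(1 for i in items if i.ai_flagged and not i.human_violation)
    false_neg = sum(1 for i in items if not i.ai_flagged and i.human_violation)

    # Guard against empty denominators so small samples do not crash.
    precision = true_pos / (true_pos + false_pos) if (true_pos + false_pos) else 0.0
    recall = true_pos / (true_pos + false_neg) if (true_pos + false_neg) else 0.0
    return precision, recall


sample = [
    ReviewedItem(ai_flagged=True, human_violation=True),
    ReviewedItem(ai_flagged=True, human_violation=False),   # over-blocked
    ReviewedItem(ai_flagged=False, human_violation=True),   # slipped through
    ReviewedItem(ai_flagged=False, human_violation=False),
]
precision, recall = precision_recall(sample)
```

Low precision suggests the filter is over-blocking legitimate posts; low recall suggests harmful content is slipping through. Tracking both over time shows whether a rule change or retraining actually helped.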

What Are the Common Risks of Unmoderated UGC?

- Hate speech and discrimination

- Spam, scams, and phishing links

- Graphic or violent content

- Child exploitation material

- Misinformation or defamation

- Personal data leaks (email, phone numbers, etc.)

Even one bad post going viral can hurt your platform’s credibility and cost thousands (or more) in damage control.
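Some of these risks, such as personal data leaks, are easier to screen for mechanically than others. As a rough sketch (not a production-grade detector), the example below redacts email addresses and phone-like numbers with two deliberately simple regular expressions. The patterns, the placeholder text, and the `redact_personal_data` function name are all assumptions made for illustration; real phone and email formats vary far more than this covers.

```python
import re

# Deliberately simple patterns for illustration; real systems need broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_personal_data(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[email removed]", text)
    text = PHONE_RE.sub("[phone removed]", text)
    return text


redacted = redact_personal_data("Call me on +1 555 010 9999 or mail jo@example.com")
```

Pattern-based checks like this work for structured data such as contact details, but they are of little use for context-dependent risks like hate speech or misinformation.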

Key Moderation Methods for UGC

There’s no one-size-fits-all approach when it comes to moderating user-generated content. Different platforms use different methods, depending on how much content they handle and how quickly they need to act. Here are the main types of moderation you’ll come across:

Pre-Moderation

With this method, every piece of content is reviewed before it appears publicly. It offers the highest level of control, because nothing goes live without review, but it can slow things down, especially on fast-moving platforms. It’s often used in communities where safety or accuracy is a top priority, like forums for kids or health-related discussions.

Post-Moderation

Here, content goes live right away and is reviewed after the fact. If it breaks the rules, it’s taken down. It’s much faster than pre-moderation and works well if your team has tools in place to catch harmful posts quickly. But if you don’t have real-time support, there’s always a risk that users might see something inappropriate before it’s removed.
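The practical difference between pre-moderation and post-moderation comes down to what status a post gets the moment it is submitted. Here is a minimal sketch of that idea; the `Mode` and `Status` names and the two functions are hypothetical and not taken from any particular platform.

```python
from enum import Enum


class Mode(Enum):
    PRE = "pre"    # review first, publish later
    POST = "post"  # publish first, review later


class Status(Enum):
    PENDING = "pending"      # hidden, waiting for review
    PUBLISHED = "published"  # visible to other users
    REMOVED = "removed"      # taken down after review


def on_submit(mode: Mode) -> Status:
    """Status a new post gets at submission time."""
    return Status.PENDING if mode is Mode.PRE else Status.PUBLISHED


def on_review(breaks_rules: bool) -> Status:
    """Status after a moderator has reviewed the post, in either mode."""
    return Status.REMOVED if breaks_rules else Status.PUBLISHED
```

In both modes the review outcome is the same; only the window during which users can see an unreviewed post differs.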

Reactive Moderation

This relies on users to flag inappropriate content. Once it’s reported, moderators step in to review and take action. It’s helpful as a safety net, but not strong enough to be your main method, especially if you’re trying to build a safe, trusted space. Still, it’s worth having as part of a broader strategy.

Automated Moderation

Using AI or automated filters, this method scans content as soon as it’s submitted. It’s ideal for high-traffic platforms because it works instantly and at scale. A good automated system can detect things like profanity, spam, hate speech, or inappropriate images without human input. That said, it works best when it’s customized to fit your community’s specific rules.

Hybrid Moderation

This is the approach most modern platforms aim for. It blends the speed of automation with the judgment of human moderators. AI takes care of the bulk, filtering out clear violations, while humans handle the edge cases or anything that needs context.
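A common way to split the work is by model confidence: clear-cut cases are handled automatically and everything in between goes to a person. The sketch below assumes a model that returns a violation score between 0 and 1. The `route` function and both threshold values are hypothetical and would need tuning against your own data.

```python
def route(violation_score: float,
          block_at: float = 0.95,
          allow_below: float = 0.05) -> str:
    """Route content based on a model's violation score in [0, 1]."""
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if violation_score >= block_at:
        return "auto_remove"    # clear violation, no human needed
    if violation_score < allow_below:
        return "auto_approve"   # clearly fine, publish immediately
    return "human_review"       # gray area, needs context
```

Widening the gap between the two thresholds sends more content to human reviewers, which costs more time but lowers the chance of an automated mistake.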

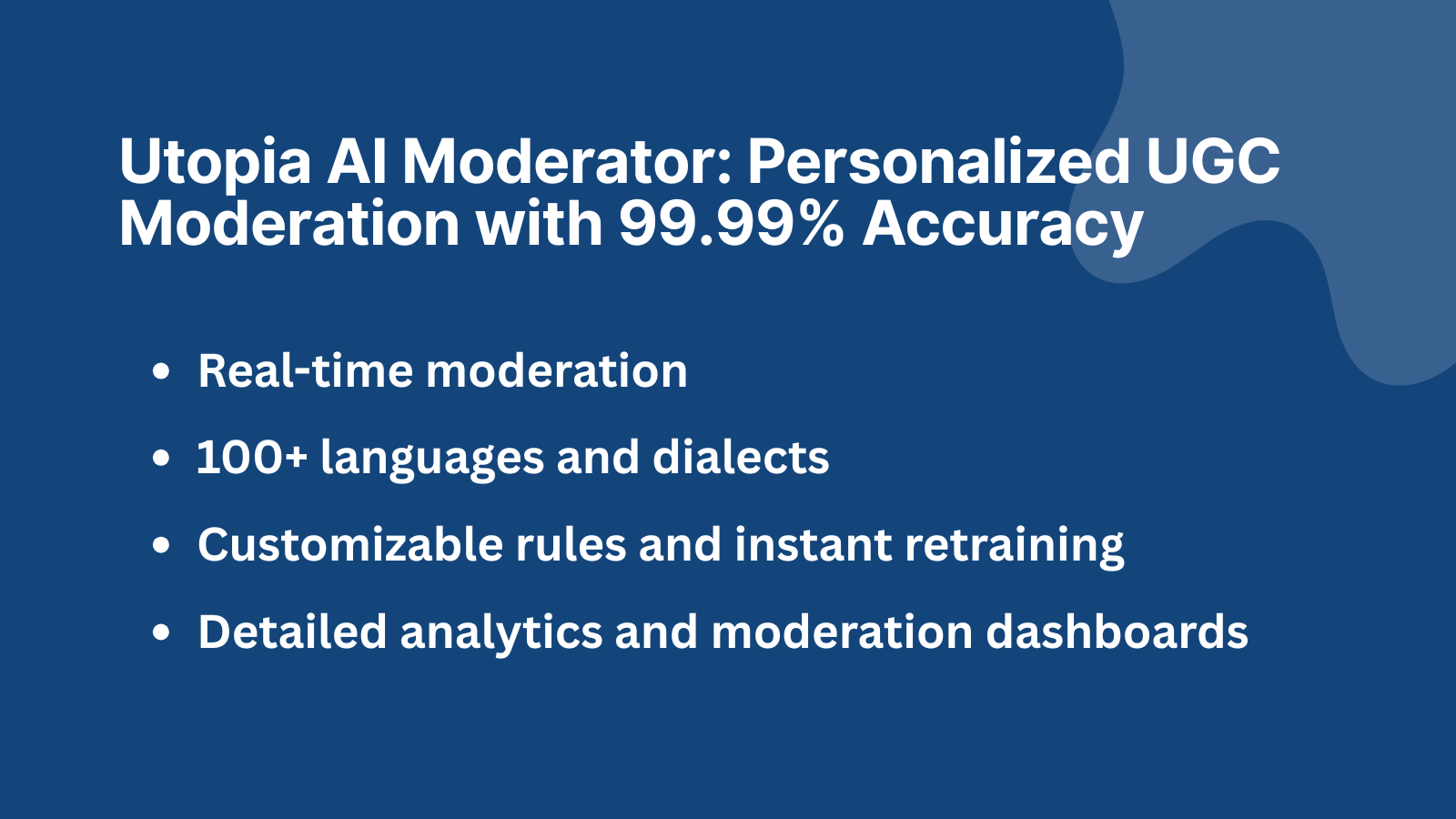

How Utopia AI Moderator Moderates User-Generated Content

Moderating large volumes of UGC, across multiple languages and formats, is no small task. That’s where Utopia AI Moderator comes in: a fully personalized, real-time content moderation solution that adapts to your specific platform and policies.

Unlike many generic moderation tools, Utopia doesn’t rely on predefined keyword filters or off-the-shelf models. It learns from your own publishing rules and human decisions to provide up to 99.99% accuracy in moderation.

Whether you’re managing a social platform, review site, dating app, or gaming community, Utopia’s language-agnostic AI can handle text, images, and even audio, detecting everything from profanity and harassment to spam and phishing links.

Integration is simple, with most clients fully up and running in just 2–3 days. Utopia AI Moderator offers real-time moderation, supports over 100 languages and dialects, and allows for customizable rules and instant retraining. Additionally, it provides detailed analytics and moderation dashboards to track performance.

Want to see how it works in action? Book a demo and discover how platforms like Decathlon and StarStable use Utopia AI to create safer user experiences and reduce moderation workloads by over 90%.

Final Thoughts

User-generated content is here to stay, and it’s growing fast. But to fully benefit from UGC, platforms need to take moderation seriously.

Ignoring harmful content puts users at risk, invites legal trouble, and weakens trust in your brand. On the flip side, smart moderation creates a space where people feel safe, engaged, and loyal.

Frequently Asked Questions

1. What exactly is UGC moderation, and why does it matter so much?

UGC moderation is really just about keeping your platform safe by checking what users are posting, whether it’s comments, reviews, photos, or videos. It’s important because the internet doesn’t always bring out the best in people. Without some kind of filter, things like hate speech or scams can slip through and hurt your community. A bit of moderation goes a long way in building trust and keeping things running smoothly.

2. How do big platforms usually handle moderation?

They usually don’t rely on one method. It’s a mix: automated tools take care of the bulk (like obvious spam or profanity), and human moderators step in when something needs a second look. It’s not perfect, but it’s the most realistic way to keep up with the constant flood of content.

3. What kind of stuff should be moderated?

Pretty much anything users can submit: text, images, video, even audio. Whether it’s a comment section, a product review, or a profile picture, if it’s user-generated, it should be looked at before it causes problems. It’s not about being overly strict; it’s about keeping your space safe and on-brand.

4. Can AI handle moderation on its own?

It’s good, but it’s not magic. AI can do a lot, especially when it’s trained properly, but humans still play an important role. The best results usually come from combining both. For example, Utopia AI Moderator learns from your team’s decisions and handles the repetitive stuff, so people can focus on the tricky edge cases.

5. What if people try to get around the filters?

People do try to game the system by changing letters, using symbols, or inventing weird spellings. That’s why old-school filters don’t always work. But Utopia AI doesn’t rely on exact matches; it looks at meaning. So even if someone tries to hide a bad word, it can usually still catch it.
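To see why exact-match filters fall short, here is a small sketch comparing a plain word lookup with a version that normalizes the text first. This is not how Utopia AI works; it is a much simpler technique, shown only to illustrate the evasion problem. The substitution map, the one-word blocklist, and the function names are all made up for the example.

```python
import re

# Hypothetical substitution map and blocklist, for illustration only.
LOOKALIKES = str.maketrans({"0": "o", "1": "i", "3": "e", "4": "a",
                            "5": "s", "7": "t", "@": "a", "$": "s", "!": "i"})
BLOCKLIST = {"scam"}


def normalize(text: str) -> str:
    """Lowercase, map look-alike characters to letters, and drop separators."""
    text = text.lower().translate(LOOKALIKES)
    text = re.sub(r"[^a-z]", "", text)        # remove spaces, dots, symbols
    return re.sub(r"(.)\1{2,}", r"\1", text)  # collapse runs of 3+ repeated letters


def exact_match(text: str) -> bool:
    """Old-school filter: only catches a blocked word spelled exactly."""
    return any(word in BLOCKLIST for word in text.lower().split())


def normalized_match(text: str) -> bool:
    """Looks for blocked terms anywhere in the normalized text."""
    normalized = normalize(text)
    return any(term in normalized for term in BLOCKLIST)
```

With this setup, `exact_match("this is a $c4m")` misses the disguised word while `normalized_match` catches it. The trade-off is that stripping spaces creates false positives (for example, "disc amount" collapses into text that contains "scam"), which is one reason character tricks alone are not enough and context still matters.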

6. Does moderation help or hurt engagement?

It actually helps a lot. When users know they’re not going to get harassed or spammed, they’re more likely to stick around and join the conversation. A cleaner, safer space leads to better interactions and, over time, a stronger community.

Want to learn more?

Check out our case studies or contact us if you have questions or want a demo.