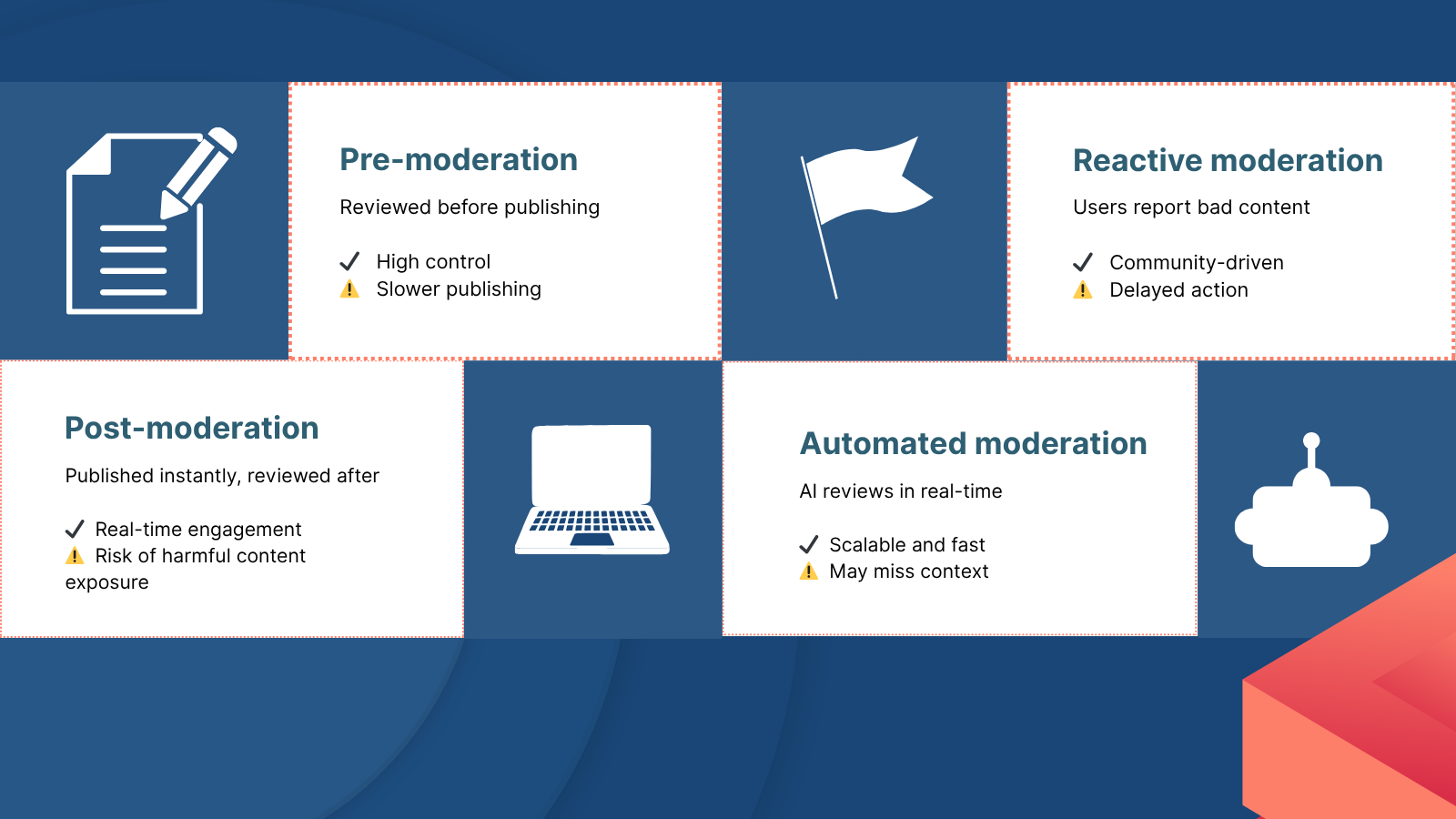

4 Types of Content Moderation: Ensuring a Safe and Engaging Digital Space

Content moderation helps keep online platforms safe, welcoming, and in line with the law. Since people post so much online these days, it’s more important than ever to keep harmful or misleading content in check. Platforms that implement robust moderation strategies can foster healthy user engagement while maintaining a positive reputation.

In this article, we dive into 4 types of content moderation, exploring how each method works, its advantages and disadvantages, and its best use cases. From the early days of human moderation to the rise of AI-driven automation, content moderation has evolved to meet the growing needs of online platforms.

1. Pre-Moderation: Full Control Before Content Goes Live

In pre-moderation, content is checked before anyone else can see it. Moderators, whether human or automated, screen posts, comments, images, videos, and other forms of content to make sure they meet platform guidelines and community standards. Only content that passes the review is approved and made public.

How It Works:

In pre-moderation, all user submissions are held in a queue. Moderators review each piece of content based on specific criteria, such as appropriateness, relevance, legality, and compliance with the platform’s rules. If the post follows the rules, it gets published. If not, it’s either flagged for a closer look or removed.
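The queue described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `PreModerationQueue` class, the `violates_rules` check, and the `BANNED_TERMS` list are hypothetical names invented for this example, and a real platform would replace the simple word check with its own review criteria or a human decision.

```python
from collections import deque
from dataclasses import dataclass, field

# Hypothetical rule list; a real platform would use its own guidelines.
BANNED_TERMS = {"scam", "spam"}


def violates_rules(text: str) -> bool:
    """Return True if any word in the text matches a banned term."""
    words = {word.strip(".,!?").lower() for word in text.split()}
    return bool(words & BANNED_TERMS)


@dataclass
class PreModerationQueue:
    pending: deque = field(default_factory=deque)   # held, not yet visible
    published: list = field(default_factory=list)   # approved and live
    flagged: list = field(default_factory=list)     # set aside for a closer look

    def submit(self, text: str) -> None:
        """Hold a submission; nothing is visible to other users yet."""
        self.pending.append(text)

    def review_all(self) -> None:
        """Review held submissions in arrival order."""
        while self.pending:
            text = self.pending.popleft()
            if violates_rules(text):
                self.flagged.append(text)
            else:
                self.published.append(text)


queue = PreModerationQueue()
queue.submit("Great product, works well")
queue.submit("This is a scam!")
queue.review_all()
print(queue.published)  # only the approved submission
print(queue.flagged)    # the submission held back for review
```

The key property is that nothing reaches `published` until `review_all` has run, which is also where the posting delay comes from.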

Advantages:

- High Control: Offers the highest level of control over what content is published. Content that violates rules is prevented from going live, ensuring that harmful or offensive material is kept off the platform.

- Prevents Damage: Effective in protecting users from encountering harmful content, such as hate speech, graphic violence, or explicit material, before it’s published.

- Brand Reputation: Keeps your brand looking good by blocking content that could hurt your image or upset your audience.

Disadvantages:

- Slower Posting: Because everything is checked first, there’s often a wait before users see their content go live. This can be frustrating for users who expect real-time engagement.

- Resource-Intensive: Pre-moderation requires significant resources in terms of human moderators or automated systems. Platforms with a large volume of content may find it difficult to keep up with the demand.

- User Experience Impact: The delay in publishing content can create a less dynamic experience, especially in platforms that thrive on real-time conversations and engagement.

Best Use Cases:

- E-commerce Websites: Where content, such as product reviews or customer feedback, must be carefully vetted to ensure compliance with brand standards and legal requirements.

- Children’s Platforms: Websites designed for younger audiences, where safety and appropriateness are critical, benefit from pre-moderation to prevent exposure to harmful content.

- Legal and Healthcare Platforms: Sites that deal with sensitive information, such as legal advice or medical content, need high levels of control to protect users and comply with industry regulations.

2. Post-Moderation: Real-Time Publishing with Post-Publication Checks

With post-moderation, users can post right away, and the content gets reviewed afterward. Content is made publicly available, and if it violates platform rules, moderators will take action to remove, edit, or flag the content for further review.

How It Works:

Once content is uploaded, it goes live for users to see. Moderators then review it after publication, checking it against community guidelines and legal requirements. If the content violates any policies, it is removed or flagged for further action. Some platforms also rely on automated systems to assist in post-moderation, quickly identifying and removing harmful content.
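As a rough sketch of this flow, the Python below publishes every post immediately and only hides it during a later review pass. The `Post` and `PostModeratedFeed` names and the `is_violation` callback are assumptions made for illustration; in practice the review step could be a human moderator, an automated check, or both.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Post:
    post_id: int
    text: str
    visible: bool = True    # live as soon as it is created
    reviewed: bool = False  # set once a moderator has looked at it


class PostModeratedFeed:
    def __init__(self, is_violation: Callable[[str], bool]):
        self.posts: list[Post] = []
        self.is_violation = is_violation  # hypothetical policy check

    def publish(self, text: str) -> Post:
        """Make the post visible right away, with no approval step."""
        post = Post(post_id=len(self.posts) + 1, text=text)
        self.posts.append(post)
        return post

    def visible_posts(self) -> list[str]:
        return [post.text for post in self.posts if post.visible]

    def review_backlog(self) -> list[int]:
        """Review posts not yet checked; hide violations and return their ids."""
        removed = []
        for post in self.posts:
            if post.reviewed:
                continue
            post.reviewed = True
            if self.is_violation(post.text):
                post.visible = False
                removed.append(post.post_id)
        return removed


feed = PostModeratedFeed(lambda text: "buy followers" in text.lower())
feed.publish("Hello everyone")
feed.publish("Buy followers cheap")
print(feed.visible_posts())   # both posts are live before any review
print(feed.review_backlog())  # ids of posts taken down during review
print(feed.visible_posts())   # only the compliant post remains
```

The gap between `publish` and `review_backlog` is the window in which users can see a violating post, so the shorter that gap, the lower the exposure.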

Advantages:

- Instant Interaction: Content is visible immediately, allowing users to engage with posts and participate in discussions without delay. This is essential for platforms that rely on user interaction and real-time content.

- Faster Publishing: By allowing content to be published instantly, post-moderation encourages users to contribute more frequently, knowing their posts will be live without waiting for approval.

- More Dynamic Experience: Since content is published instantly, the platform can maintain a lively, fast-paced environment, which is critical for social media platforms, forums, and news websites.

Disadvantages:

- Exposure to Harmful Content: Because content goes live right away, people might see something harmful before it gets taken down. This can create an unpleasant or unsafe environment for users.

- Heavy Burden on Moderators: Given that content goes live first, moderators must act quickly to remove harmful content before it can spread. This requires efficient processes and systems that can handle the volume.

- Losing Trust: If bad content stays up too long, people might stop trusting the platform.

Best Use Cases:

- Social Media Platforms: Where users expect instant content publication and interaction. Post-moderation allows for dynamic discussions but requires swift action to remove harmful content.

- News Websites: For real-time updates on breaking news and events, post-moderation allows for fast publishing while still maintaining content quality and safety.

- Online Communities and Forums: Platforms that prioritize free-flowing discussions, such as Reddit, can benefit from post-moderation, provided they have sufficient moderation resources.

3. Reactive Moderation: User-Driven Content Reporting

Reactive moderation relies on users to report content they believe violates the community guidelines. It shifts part of the responsibility for identifying harmful content to the community rather than relying solely on moderators.

How It Works:

Users can click a “report” button to flag content they think breaks the rules. When enough users flag a particular post, the content is reviewed by moderators, who can then remove it or take further action. The reporting system may also be automated to prioritize urgent reports or identify patterns of abuse.
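The "enough users flag a post" step can be sketched as a simple threshold on distinct reporters. The `ReportTracker` class and the threshold of 3 are hypothetical choices for this example; the right threshold depends on the platform and the severity of the content being reported.

```python
from collections import defaultdict

# Hypothetical value; each platform would tune this for itself.
REPORT_THRESHOLD = 3


class ReportTracker:
    def __init__(self, threshold: int = REPORT_THRESHOLD):
        self.threshold = threshold
        self.reporters = defaultdict(set)  # post id -> ids of users who reported it
        self.review_queue = []             # posts waiting for a moderator

    def report(self, post_id: str, user_id: str) -> bool:
        """Record a report and return True if the post is queued for review."""
        # A set counts each user once, so one person cannot
        # push a post over the threshold by reporting repeatedly.
        self.reporters[post_id].add(user_id)
        reached = len(self.reporters[post_id]) >= self.threshold
        if reached and post_id not in self.review_queue:
            self.review_queue.append(post_id)
        return post_id in self.review_queue


tracker = ReportTracker(threshold=2)
tracker.report("post-1", "alice")
tracker.report("post-1", "alice")  # repeat report from the same user
tracker.report("post-1", "bob")    # second distinct reporter
print(tracker.review_queue)
```

Counting distinct reporters rather than raw clicks is one modest guard against the misuse problem, although it does not stop a group of users from coordinating their reports.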

Advantages:

- Cost-Effective: By relying on users to identify inappropriate content, reactive moderation reduces the need for a large team of moderators. This can save resources and time for platform owners.

- Empowers the Community: Users play an active role in maintaining the platform’s integrity, creating a more democratic and engaged user experience.

- Scalable: The more users a platform has, the more help it gets in spotting bad content. Larger user bases mean more people reporting content, which can help maintain the platform’s safety.

Disadvantages:

- Delayed Action: Since content remains visible until flagged by users, harmful or offensive material can be seen by many before it is addressed, potentially causing harm.

- Misuse: Some users might report content just because they disagree with it, not because it breaks any rules.

- Inconsistent Moderation Quality: The effectiveness of reactive moderation depends on the willingness and ability of users to accurately report violations, which can be inconsistent.

Best Use Cases:

- Online Forums: Communities like Reddit or Quora, where users are actively involved in content discussions and can report inappropriate behavior or material.

- Online Marketplaces: Sites like eBay, where people can report scams or rude messages, work well with reactive moderation, making it easier to address issues without relying solely on staff.

4. Automated Moderation: AI-Driven Content Review

Automated moderation is one of the most advanced types of content moderation. It uses AI tools to check content as soon as it’s posted and to identify harmful material, such as hate speech, explicit imagery, or abusive language, based on predefined rules or learned patterns. AI-powered systems can detect violations faster and at a larger scale than human moderators.

How It Works:

AI systems are trained to recognize specific patterns in content, whether that’s certain words, images, or behaviors that violate platform rules. Once content is flagged, the system can automatically remove or block it. Advanced AI tools, such as Utopia AI Moderator, can go beyond simple keyword detection by analyzing the context, tone, and even slang used in content, allowing for more accurate moderation.
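To make the flag-then-act step concrete, here is a deliberately simple Python sketch. It uses a weighted keyword score as a stand-in for a trained model, so it shows the simple keyword detection that advanced tools go beyond, not how any particular product works. The term weights, the thresholds, and the `score` and `decide` functions are all assumptions made for illustration.

```python
import re

# Hypothetical weights; a real system would learn these from labeled data.
TERM_WEIGHTS = {"idiot": 0.5, "hate": 0.4, "kill": 0.6}

# Hypothetical thresholds for the two automated actions.
BLOCK_AT = 0.8   # block automatically at or above this score
REVIEW_AT = 0.3  # send to a human reviewer at or above this score


def score(text: str) -> float:
    """Sum the weights of known terms in the text, capped at 1.0."""
    words = re.findall(r"[a-z']+", text.lower())
    return min(1.0, sum(TERM_WEIGHTS.get(word, 0.0) for word in words))


def decide(text: str) -> str:
    """Map a score to one of three actions: allow, review, or block."""
    value = score(text)
    if value >= BLOCK_AT:
        return "block"
    if value >= REVIEW_AT:
        return "review"
    return "allow"


for message in ["Have a nice day", "I hate Mondays", "You idiot, I hate you"]:
    print(message, "->", decide(message))
```

Note that "I hate Mondays" is routed to review even though it is harmless. A keyword score cannot see context, which is why a middle "review" band that hands borderline cases to a person can be a sensible design choice.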

Advantages:

- Speed and Efficiency: AI can process massive amounts of content in real time, enabling platforms to moderate millions of posts, comments, images, and videos without human intervention.

- 24/7 Monitoring: AI tools work day and night, so content is checked no matter when it’s posted, making them ideal for global platforms with users in different time zones.

- Consistency and Scalability: Unlike human moderators, AI does not tire or become inconsistent. AI-driven moderation can scale effortlessly to handle increased traffic and content flow.

Disadvantages:

- Context Issues: AI often misses sarcasm or cultural references, so it might make mistakes when deciding what’s okay.

- False Positives/Negatives: Automated systems may incorrectly flag harmless content or fail to detect subtle violations, which could lead to user frustration or exposure to harmful material.

- Training and Maintenance: AI models require continuous training and updates to stay effective, which can be resource-intensive and require ongoing supervision.

Best Use Cases:

- Large-Scale Platforms: Social media giants like Facebook, Instagram, or Twitter, which handle millions of posts daily, benefit from automated moderation to keep content safe without overloading human moderators.

- E-commerce Sites: Online marketplaces that feature user-generated reviews, like Amazon or eBay, use automated moderation to ensure reviews meet the platform’s policies while scaling efficiently.

- News Websites and Blogs: News platforms can utilize AI to automatically flag harmful content, such as fake news or offensive comments, ensuring that published articles remain reputable.

Selecting the Right Moderation Strategy

Content moderation plays a big role in keeping online spaces safe, fun, and within the rules. Whether you choose pre-moderation for full control, post-moderation for fast publishing, reactive moderation to empower users, or automated moderation for scalability, understanding each method’s strengths and weaknesses is key to finding the right solution for your platform.

Content Moderation Tools: Keeping Your Platform Safe and Efficient

With so much content being shared every day, it can be tough to keep things safe and respectful on your platform. Content moderation tools help by automatically checking text, images, and audio to make sure everything meets your community standards.

Why It Works:

- Instant Moderation: Content is checked right away, so harmful posts are caught before they can spread. This keeps the environment safe and positive for everyone.

- Customizable Rules: You can set the moderation rules to match what’s best for your platform, whether it’s social media, an online store, or a forum.

- Works in Any Language: No matter where your users are from, these tools can handle all kinds of content, including slang and informal language.

With the right tools in place, platforms can save time, reduce costs, and create a better experience for users. Whether you’re running an e-commerce site, a social network, or a gaming community, moderation tools are essential for keeping things running smoothly.

Want to learn more?

Check out our case studies or contact us if you have questions or want a demo.